So, to summarize what an SQA engineering team should keep in mind while testing (read the first two parts here and here if you have not already):

- Strength of the code for this feature. If it breaks easily and its existence is crucial, the whole system can break. Remember: a chain is only as strong as its weakest link!

- Performance of the feature. Find the possible bottlenecks before the customer finds them. It is better to have a well-documented and tested product limitation in the specifications than an angry customer or a furious manager.

- Recovery. If the feature breaks, can it restart and recover so that it keeps working as well as possible with the salvaged data?

- Backward compatibility. Can the system work with another system running an older version of this feature?

- Sensitivity. What happens if the feature receives unexpected data from another feature and there is no failsafe mechanism?
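The sensitivity point can be turned into a small automated check. The sketch below is only an illustration under stated assumptions: `parse_vlan_id()` is a hypothetical feature entry point (not from any real product) that is assumed to accept VLAN IDs 1 to 4094, and the list of unexpected inputs is made up. The check treats a clean `ValueError` as the failsafe working, and flags both crashes and silently accepted garbage.

```python
# Sketch of a sensitivity check. parse_vlan_id() is a HYPOTHETICAL feature
# entry point used only for illustration; it is not a real product API.


def parse_vlan_id(raw):
    """Turn raw input from another feature into a VLAN ID (assumed 1..4094).

    Anything that cannot be safely accepted is rejected with ValueError,
    which is the failsafe behaviour this check looks for.
    """
    if isinstance(raw, bool) or not isinstance(raw, (int, str)):
        raise ValueError(f"unsupported type: {type(raw).__name__}")
    try:
        vlan = int(raw)
    except ValueError:
        raise ValueError(f"not a number: {raw!r}") from None
    if not 1 <= vlan <= 4094:
        raise ValueError(f"out of range: {vlan}")
    return vlan


# Made-up examples of unexpected data another feature might send.
UNEXPECTED_INPUTS = [None, "", "abc", -1, 0, 4095, 10**12, 3.5, b"\x00", [], True]


def check_sensitivity(feature, inputs):
    """Return (input, outcome) pairs where the failsafe did NOT work."""
    findings = []
    for value in inputs:
        try:
            feature(value)
        except ValueError:
            continue  # controlled rejection: the failsafe worked
        except Exception as exc:
            findings.append((value, type(exc).__name__))  # uncontrolled crash
        else:
            findings.append((value, "accepted"))  # garbage silently accepted
    return findings


if __name__ == "__main__":
    problems = check_sensitivity(parse_vlan_id, UNEXPECTED_INPUTS)
    print("uncontrolled failures:", problems or "none")
```

For this hypothetical parser the run prints `uncontrolled failures: none`. Pointing `check_sensitivity()` at a naive version such as `lambda v: int(v)` would instead report, among others, the `TypeError` on `None` and the silently accepted `-1`.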

These are a few rules of thumb to keep in mind while working as an SQA engineer. Most of them were written down early in the history of software development, but they are still valid and important.

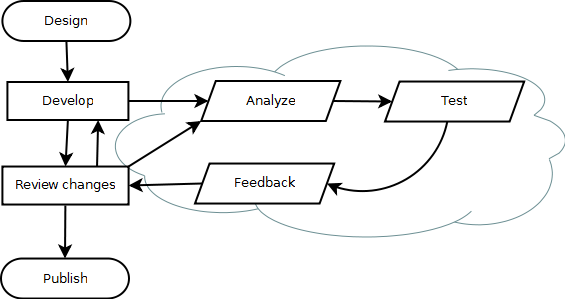

Running all those tests on every minor or major fix is quite time-consuming, but nowadays it can be automated. The repeated testing of a specific feature on every major or minor patch is called

Regression testing.

This kind of testing checks whether the system is still sane after a newly compiled release, so most of the test categories above can be skipped.

It is still important not to skip checking that the basics work. So once installation, data compatibility and feature compatibility have been tested automatically, and important checks such as parameter validation have produced results, we move on to the real regression tests. These often go without a formal plan and consist of analyzing the patched bugs and how they interact with older fixes:

- What if the new fix does not work?

- What if the new fix introduces a new bug?

- What if the new fix breaks an old fix?
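The first and third questions can be automated by keeping one check per patched bug and rerunning the whole set on every build. The second question (new bugs) still needs the basic sanity tests and human observation. A minimal sketch, assuming a hypothetical `normalize_hostname()` feature and made-up `BUG-xxx` IDs:

```python
# Sketch of a regression suite: every patched bug leaves behind a check that
# reproduces it. normalize_hostname() and the BUG-xxx IDs are HYPOTHETICAL.

REGRESSION_CHECKS = {}


def regression(bug_id):
    """Register a check that must keep passing once bug_id is fixed."""
    def register(check):
        REGRESSION_CHECKS[bug_id] = check
        return check
    return register


def normalize_hostname(name):
    """Hypothetical feature under test, as shipped in the new build."""
    return name.strip().lower().rstrip(".")


@regression("BUG-101")  # old fix: trailing dot must be removed
def check_trailing_dot():
    return normalize_hostname("router1.") == "router1"


@regression("BUG-205")  # old fix: surrounding whitespace must be ignored
def check_whitespace():
    return normalize_hostname("  Router1 ") == "router1"


@regression("BUG-317")  # newest fix: mixed case must be folded
def check_case():
    return normalize_hostname("ROUTER1") == "router1"


def run_regression(checks):
    """Run every registered check; return the IDs of bugs that came back."""
    failed = []
    for bug_id, check in sorted(checks.items()):
        try:
            ok = check()
        except Exception:
            ok = False  # a crash inside a check counts as a regression too
        if not ok:
            failed.append(bug_id)
    return failed


if __name__ == "__main__":
    reopened = run_regression(REGRESSION_CHECKS)
    print("reopened bugs:", reopened or "none")
```

If the newest fix does not work, its own ID (here `BUG-317`) shows up in the result; if it silently reverts an older fix, that older bug's ID shows up instead. A crash inside a check is deliberately recorded as a regression rather than aborting the whole run.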

This kind of testing is important if the final product is to ship without old bugs reappearing in code we believe is already fixed. Regression testing builds confidence in the stability of a feature across versions, but it is very time-consuming and can draw attention away from genuinely new bugs that slip through blind spots in the whole test plan. That is why most regression testing is automated, with a human observing the results. And while it is time-consuming and can’t catch every bug, it provides the confidence needed when testing new, growing features during their development cycle. Most of these tests run on a single system, however, and can’t catch the big bugs that only appear in a fully operational environment. That’s why there is

Stress testing.

What is important to test on a feature that is supposed to work on a heavily populated server or a high-traffic network?

- Learn its boundaries and try to overwhelm them. For example, try to configure 4095 VLANs on a single port and flood all of them using a traffic generator.

- Try to overflow a buffer. For example, attempt 1 million administrative logins to the device under test.

- Try to flood the feature with an enormous volume of data and see whether it survives.

- Check how the feature operates in spartan conditions, such as low memory or high CPU load.
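Boundary and flood tests follow the same pattern whatever the limit is: push one step past the documented maximum (like configuring one VLAN more than the specification allows) and hammer the feature with volume while checking an invariant at every step. The sketch assumes a hypothetical `SessionTable` with an invented limit of 32 administrative sessions; a real test would drive the device under test through its management interface instead.

```python
# Sketch of boundary and flood testing. SessionTable and MAX_SESSIONS are
# HYPOTHETICAL stand-ins for a device's login handling, not a real API.
import time

MAX_SESSIONS = 32  # invented limit, standing in for the feature's spec


class SessionTable:
    """Hypothetical feature: tracks administrative login sessions."""

    def __init__(self, limit=MAX_SESSIONS):
        self.limit = limit
        self.sessions = set()
        self.rejected = 0

    def login(self, user):
        if len(self.sessions) >= self.limit:
            self.rejected += 1
            return False  # refuse gracefully instead of growing without bound
        self.sessions.add(user)
        return True

    def logout(self, user):
        self.sessions.discard(user)


def check_boundary(table):
    """Fill the table exactly to its limit, then try one login too many."""
    for i in range(table.limit):
        if not table.login(f"user{i}"):
            raise AssertionError(f"login {i} refused below the limit")
    return table.login("one-too-many")  # expected: False


def flood_logins(table, attempts):
    """Fire many logins (every even one logs straight out) and check invariants."""
    start = time.perf_counter()
    for i in range(attempts):
        user = f"admin{i}"
        if table.login(user) and i % 2 == 0:
            table.logout(user)
        # The invariant must hold at every step, not only at the end.
        if len(table.sessions) > table.limit:
            raise AssertionError(f"session limit exceeded at attempt {i}")
    elapsed = time.perf_counter() - start
    return elapsed, len(table.sessions), table.rejected


if __name__ == "__main__":
    print("one over the limit accepted?", check_boundary(SessionTable()))
    elapsed, active, rejected = flood_logins(SessionTable(), 1_000_000)
    print(f"{active} active, {rejected} rejected in {elapsed:.2f}s")
```

The invariant is checked inside the loop rather than once at the end, because a limit that is exceeded briefly and then recovers is still a bug a customer can hit. The elapsed time is returned so the same run can also feed the performance numbers.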

While these tests are very important, their test cases often catch only a single bug in their entire lifetime after being written. That’s why we often do the so-called

Exploration/exploitation testing.

We know the product, we know the feature, we know the code, we know EVERYTHING. That is exactly the bad part of our testing. The customer knows nothing except the configuration example in the manual. They need the units for real work, not for lab testing. So they start building a big mesh network of units, wiring them together with cables and configuring routing protocols. What happens? You guessed it: we missed some major blind spot. That’s why exploitation testing is also very important.
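Exploration is mostly a human activity, but part of it can be mechanized with randomized, model-based testing: drive the feature with random sequences of operations nobody planned, compare it against a trivially correct model, and record the seed of any failing run so it can be replayed and added to the test plan as a new case. In the sketch, `VlanTable` is a hypothetical feature with a deliberately planted blind spot (re-adding an existing VLAN inflates its count), and a plain Python `set` serves as the model.

```python
# Sketch of randomized, model-based exploration. VlanTable is a HYPOTHETICAL
# feature with a deliberately planted blind spot; a plain set is the model.
import random


class VlanTable:
    """Hypothetical feature that caches its VLAN count."""

    def __init__(self):
        self._vlans = set()
        self._count = 0

    def add(self, vlan):
        self._vlans.add(vlan)
        self._count += 1  # planted bug: re-adding an existing VLAN still counts

    def remove(self, vlan):
        if vlan in self._vlans:
            self._vlans.remove(vlan)
            self._count -= 1

    def count(self):
        return self._count


def _apply(feature, model, op, vlan):
    """Apply one operation to both the feature and the reference model."""
    getattr(feature, op)(vlan)
    if op == "add":
        model.add(vlan)
    else:
        model.discard(vlan)


def explore(make_feature, trials=100, steps=20, first_seed=0):
    """Random-walk the feature; return (seed, ops) of the first failing run."""
    for seed in range(first_seed, first_seed + trials):
        rng = random.Random(seed)  # one seed per trial => reproducible runs
        feature, model, ops = make_feature(), set(), []
        for _ in range(steps):
            op, vlan = rng.choice(("add", "remove")), rng.randint(1, 8)
            ops.append((op, vlan))
            _apply(feature, model, op, vlan)
            if feature.count() != len(model):
                return seed, ops
    return None


def replay(make_feature, ops):
    """Re-run recorded operations; True if the feature still matches the model."""
    feature, model = make_feature(), set()
    for op, vlan in ops:
        _apply(feature, model, op, vlan)
    return feature.count() == len(model)


if __name__ == "__main__":
    result = explore(VlanTable)
    if result is None:
        print("no mismatch found")
    else:
        seed, ops = result
        print(f"seed {seed} breaks the feature after {len(ops)} steps: {ops}")
```

Because each trial uses its own seeded `random.Random`, a failure is fully reproducible from its seed, and `replay()` turns the recorded operations into a ready-made regression test case.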

- Unknown bugs are often found in another feature while we test a new one. It’s important to alert the person responsible for that other feature and to add a new test case to their test plan, so the blind spot they missed gets retested next time.

- Sometimes your competitors’ forums or a newsgroup mention interesting bugs that you can try to reproduce on the software or equipment you are developing.

- And it does happen: you test the feature again and again on every major release, and then the developers paste some old buggy code into the new feature and everything breaks. You should be vigilant about what enters the product as a new feature, where it enters, and what possible breaking points it has. If you are not, you probably will not like the job at all.

The job description itself may surprise you.